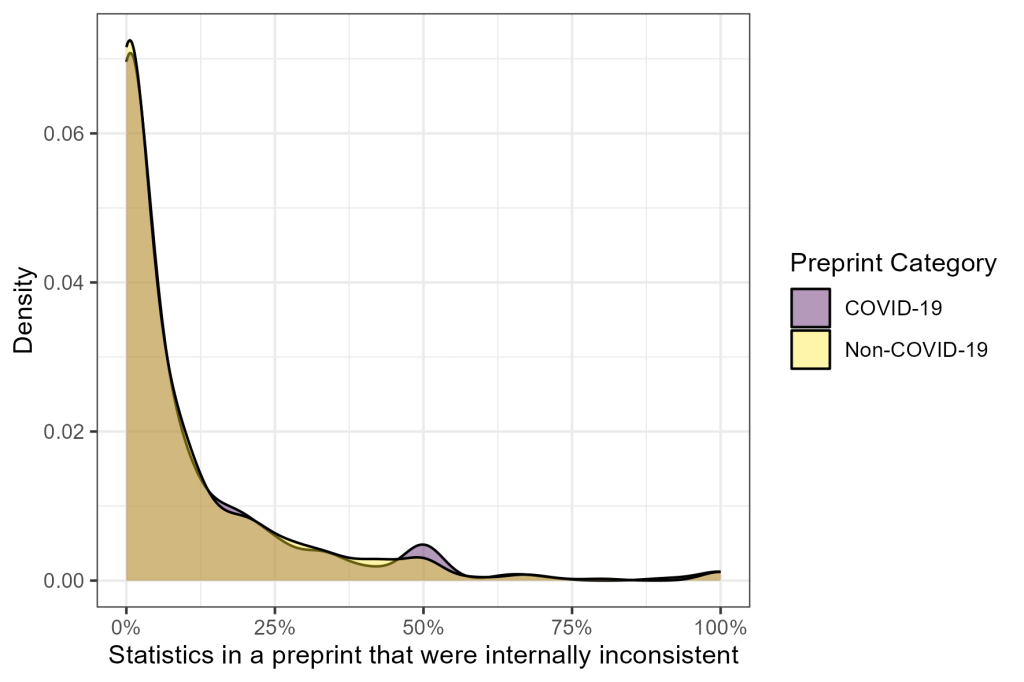

The COVID-19 outbreak has led to an exponential increase in publications and preprints about the virus, its causes, consequences, and possible cures. COVID-19 research has been conducted under high time pressure and has been subject to financial and societal interests. Doing research under such pressure may influence the scrutiny with which researchers perform and write up their studies. Researchers may become more diligent because of the high-stakes nature of the research, or the time pressure may lead them to cut corners and produce lower-quality output.

In this study, we conducted a natural experiment to compare the prevalence of incorrectly reported statistics in a stratified random sample of COVID-19 preprints and a matched sample of non-COVID-19 preprints.
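To make "incorrectly reported statistics" concrete, here is a minimal sketch of one kind of check: recomputing a p-value from a reported test statistic and comparing it with the reported p-value. It is an illustration only, not necessarily the procedure used in the study. It assumes a two-tailed z-test, and the function names and the rounding tolerance are hypothetical choices made for this example.

```python
import math


def two_tailed_p_from_z(z: float) -> float:
    """Two-tailed p-value for a standard normal test statistic."""
    # For Z ~ N(0, 1): P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


def is_consistent(z: float, reported_p: float, decimals: int = 2) -> bool:
    """Check whether a reported p-value matches the one recomputed from z.

    Assumption for this sketch: the reported p-value was rounded to
    `decimals` places, so a difference of up to half a unit in the last
    reported decimal is treated as consistent.
    """
    recomputed = two_tailed_p_from_z(z)
    tolerance = 0.5 * 10 ** (-decimals)
    return abs(recomputed - reported_p) <= tolerance


# "z = 1.96, p = .05" is internally consistent.
print(is_consistent(1.96, 0.05))
# "z = 2.10, p = .02" is not: the recomputed p-value is roughly .036.
print(is_consistent(2.10, 0.02))
```

A fuller check would also cover other test types (t, F, chi-square, r) and allow for rounding in the reported test statistic itself, which this sketch ignores.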

Our results show that the overall prevalence of incorrectly reported statistics is 9–10%, but both frequentist and Bayesian hypothesis tests show no difference in the number of statistical inconsistencies between COVID-19 and non-COVID-19 preprints.

Taken together with previous research, our results suggest that the danger of hastily conducting and writing up research lies primarily in the risk of conducting methodologically inferior studies, and perhaps not in the statistical reporting quality.

You can find the full preprint here: https://psyarxiv.com/asbfd/.