We know that p-hacking and publication bias are bad. But how bad? And in what ways could they affect our conclusions?

Proud to report that my PhD student Esther Maassen published a new preprint: “The Impact of Publication Bias and Single and Combined p-Hacking Practices on Effect Size and Heterogeneity Estimates in Meta-Analysis”.

It’s a mouthful, but this nicely reflects the level of nuance in her conclusions. Some key findings include:

- Publication bias is very bad for effect size estimation: it systematically inflates the estimates

- Not all p-hacking strategies are equally detrimental to effect size estimation. For instance, even though optional stopping may be terrible for your Type I error rate, it adds little bias to effect size estimates. Selective outcome reporting and optional dropping of specific types of participants, on the other hand, are really, really bad.

- Heterogeneity was also impacted by p-hacking, but sometimes in surprising ways. Turns out: heterogeneity is a complex concept!
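To get a feel for why publication bias inflates meta-analytic effect sizes, here is a minimal toy sketch. It is not the simulation design from the preprint. It assumes simple two-group studies with a known SD of 1 (so a z-test can be used with only the standard library), equal sample sizes per study, and a "journal" that only publishes significant results in the expected direction. The names `simulate_study` and `meta_estimate` and all default parameter values are hypothetical choices for illustration.

```python
import random
from statistics import NormalDist, fmean


def simulate_study(true_effect, n_per_group, rng):
    """Simulate one two-group study (known SD = 1); return (estimate, two-sided p-value)."""
    treatment = [rng.gauss(true_effect, 1.0) for _ in range(n_per_group)]
    control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
    estimate = fmean(treatment) - fmean(control)
    se = (2.0 / n_per_group) ** 0.5  # standard error of the mean difference when SD = 1
    z = estimate / se
    p_value = 2.0 * (1.0 - NormalDist().cdf(abs(z)))  # two-sided z-test
    return estimate, p_value


def meta_estimate(true_effect=0.2, n_per_group=20, n_studies=5000, alpha=0.05, seed=1):
    """Compare the pooled estimate over all studies with the estimate over 'published' studies only."""
    rng = random.Random(seed)
    studies = [simulate_study(true_effect, n_per_group, rng) for _ in range(n_studies)]
    all_estimates = [est for est, _ in studies]
    # Toy publication bias: only significant results in the expected direction are published.
    published = [est for est, p in studies if p < alpha and est > 0]
    # With equal n in every study, inverse-variance weights are equal,
    # so the fixed-effect meta-analytic estimate reduces to a plain mean.
    return fmean(all_estimates), fmean(published), len(published)


if __name__ == "__main__":
    unbiased, biased, k = meta_estimate()
    print(f"all studies:              {unbiased:.3f}")
    print(f"published only ({k} studies): {biased:.3f}")
```

With a small true effect and small samples, only the studies that happened to overestimate the effect cross the significance threshold, so pooling the published studies alone lands far above the true value, while pooling all studies recovers it.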

Her work includes a custom Shiny app, where users can see the impact of publication bias and p-hacking in their own scenarios: https://emaassen.shinyapps.io/phacking/.

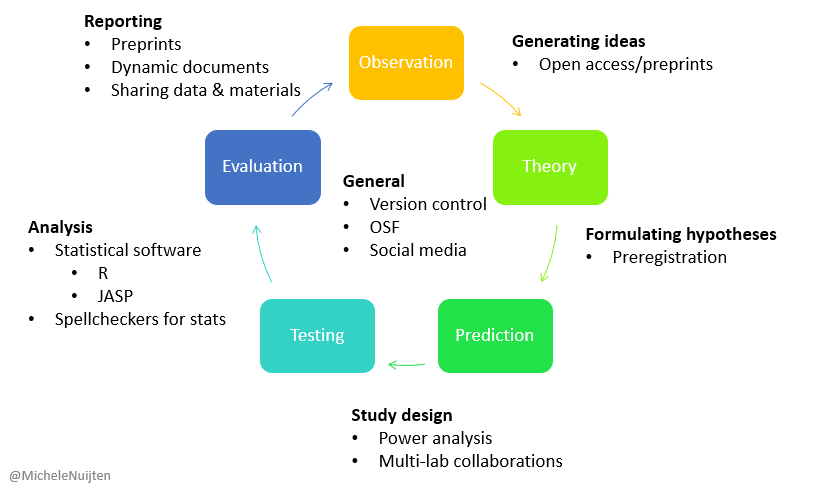

Take-away: we need systemic change to promote open and robust scientific practices that avoid publication bias and p-hacking.